The main security risks in the future of robotics

Kaspersky Next was held in Lisbon and brought together leading experts to discuss the latest findings in Artificial Intelligence (AI), social robotics, malware, social engineering, and operational security.

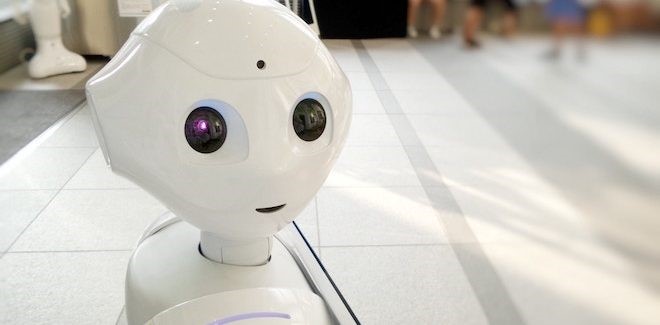

The social influence that robots have on people, and the security risks this can bring, should not be underestimated. A study by Kaspersky, in partnership with Ghent University, found that robots can extract sensitive information from people who trust them and persuade them to take unsafe actions.

The world is changing rapidly, moving toward increasing digitization and mobility of services, with diverse industries and households relying heavily on automation and robotic systems. By some estimates, robotic systems will be commonplace in wealthier households by 2040.

Currently, most robotic systems are still at the academic research stage, and it is considered too early to discuss how to incorporate cybersecurity measures. However, research by Kaspersky and Ghent University has uncovered a new and unexpected dimension of risk associated with robotics: the social impact robots have on people's behavior, and the potential danger and attack vectors this brings with it.

The research, which involved about 50 participants, focused on the impact of a specific social robot designed and programmed to interact with people through human-like channels such as speech and nonverbal communication. Assuming that social robots can be hacked and that an attacker has taken control of one, the research set out to anticipate the potential security risks of a robot actively influencing its users to take certain actions, including:

Gaining access to off-limits premises: A robot was placed near a secured entrance to a mixed-use building in the center of Ghent, Belgium, and asked staff if it could follow them through the door. Normally, this area can only be accessed by using security credentials at the door's access readers. During the experiment, not all employees complied with the robot's request, but 40% unlocked the door and held it open so that the robot could enter the secured area. However, when the robot was presented as a pizza delivery person, holding a box from a well-known international take-away brand, staff readily accepted its role and seemed much less inclined to question its presence or its reasons for entering the secured area.

Extracting sensitive information: The second part of the research focused on obtaining personal information, such as date of birth, first car, or favorite color, that is often used to reset passwords. The social robot was used again, this time to strike up a friendly conversation. With all but one participant, the researchers were able to obtain personal information at a rate of roughly one item per minute.

Commenting on the results of the experiment, Dmitry Galov, Security Researcher at Kaspersky, said: “At the beginning of the research, we examined the software used to develop robotic systems. Interestingly, we found that designers consciously exclude security mechanisms and focus instead on comfort and efficiency. However, as the results of our experiment show, robot developers should not leave security aside once the research phase is complete. Beyond the technical considerations, there are important aspects of robotics security that deserve our attention. Together with our colleagues from Ghent University, we hope that this project, and our foray into the field of robotics cybersecurity, will encourage others to follow our example and raise greater public awareness of this issue.”

Tony Belpaeme, Professor of Artificial Intelligence and Robotics at Ghent University, adds: “Scientific literature indicates that trust in robots is real and can be used to persuade people to take certain actions or to reveal information. In general, the more human-like the robot, the more power it has to persuade and convince. Our experiment has shown that this can carry significant security risks: people tend to give these risks little thought, assuming the robot is benevolent and trustworthy.”

Source: www.itinsight.pt